I recently came across a paper on using AI models to detect and identify drone radio frequency signals. Here's a brief introduction.

The paper, "RFUAV: A Benchmark Dataset for Unmanned Aerial Vehicle Detection and Identification," is from Zhejiang Sci-Tech University and was published on March 18, 2025. The authors have open-sourced the AI models and dataset described in the paper. The paper makes three main contributions: a large-scale dataset; performance benchmarks for baseline AI models such as ViT, Swin Transformer, YOLO, ResNet, Faster R-CNN, EfficientNet, and MobileNet; and a set of signal processing tools for estimating bandwidth, center frequency, and signal-to-noise ratio and for plotting 3D spectrograms.

1. Data Collection

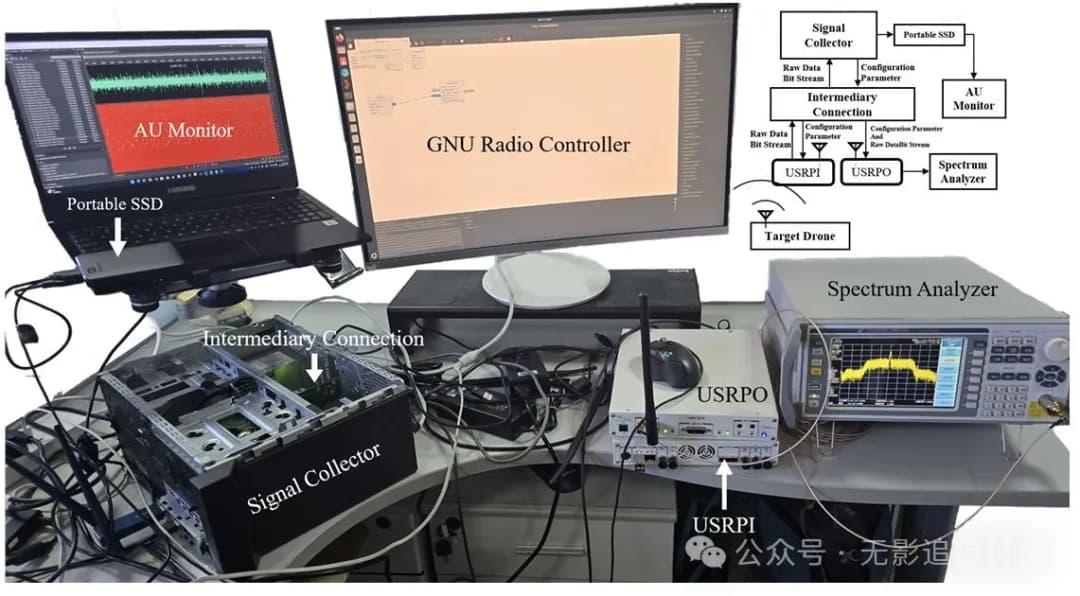

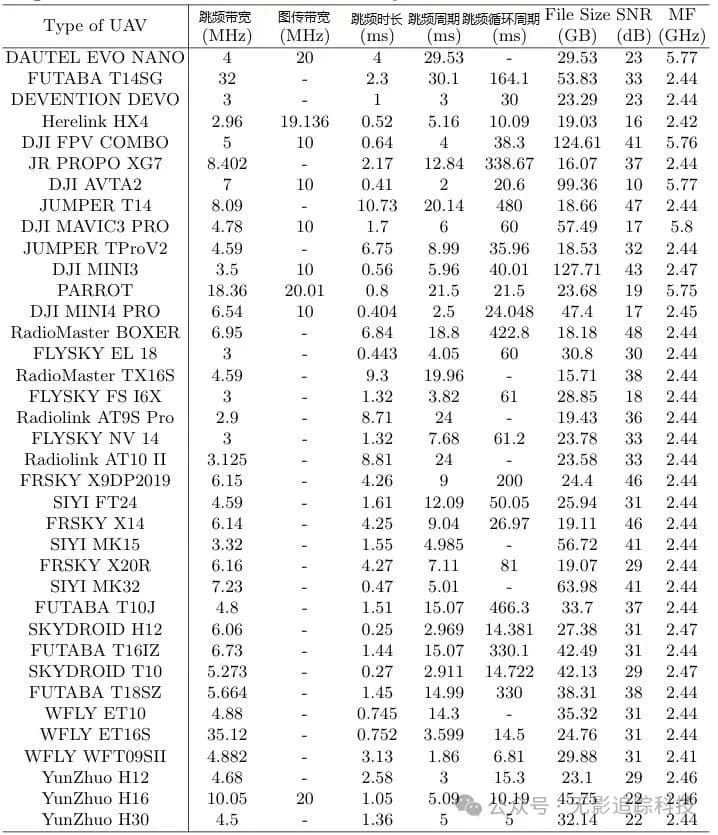

The authors first used a USRP X310 to collect 1.3 TB of data from 37 types of drones or remote controllers at different signal-to-noise ratios.

2. Signal Analysis: STFT

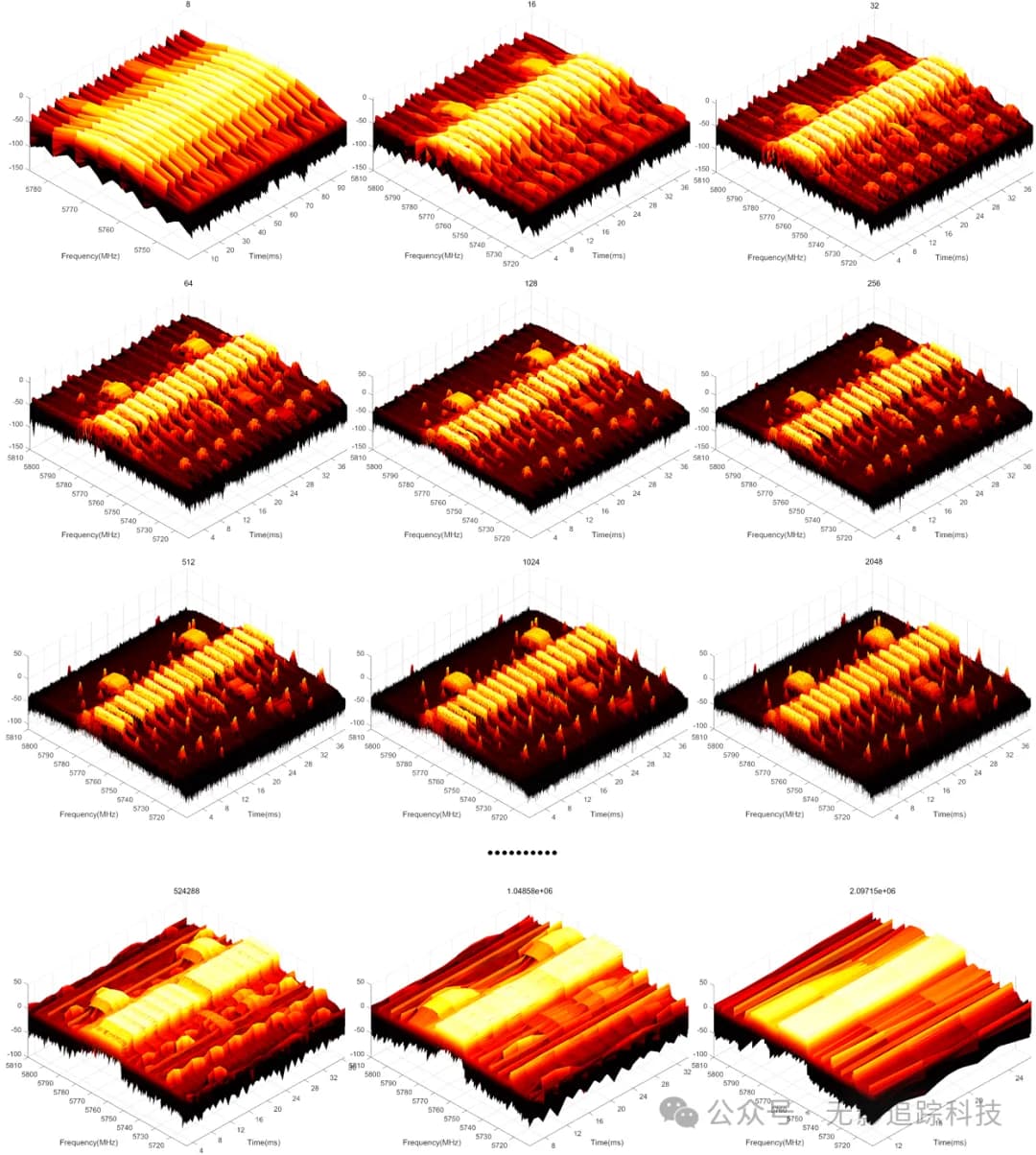

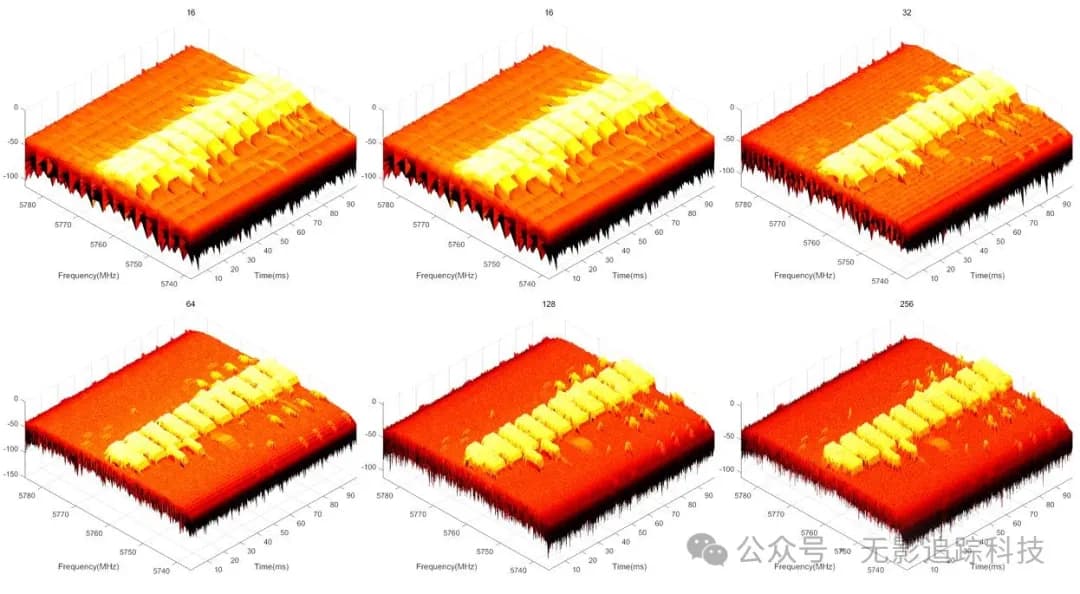

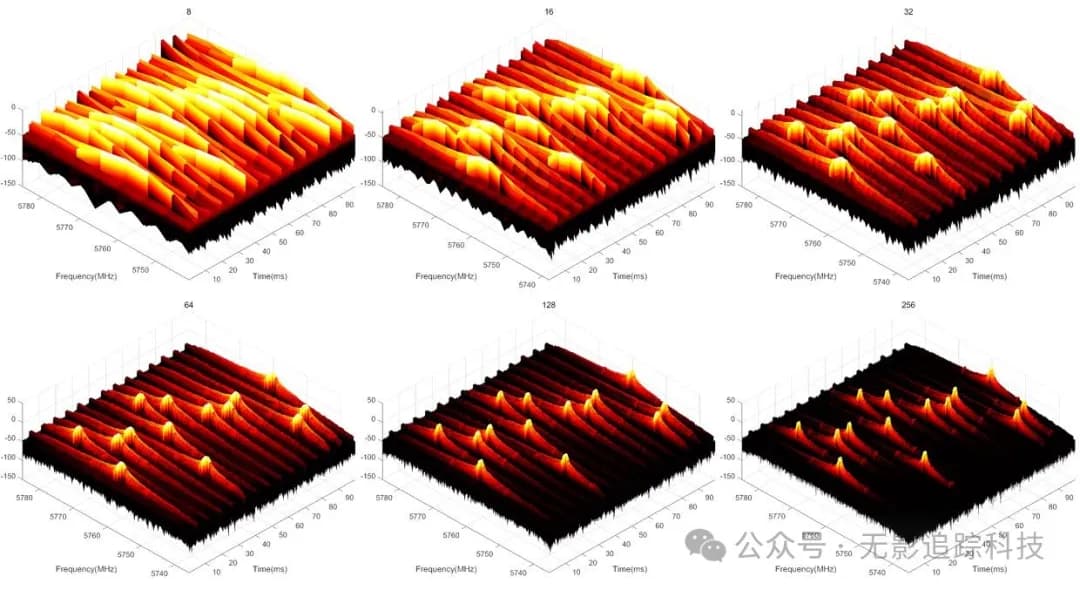

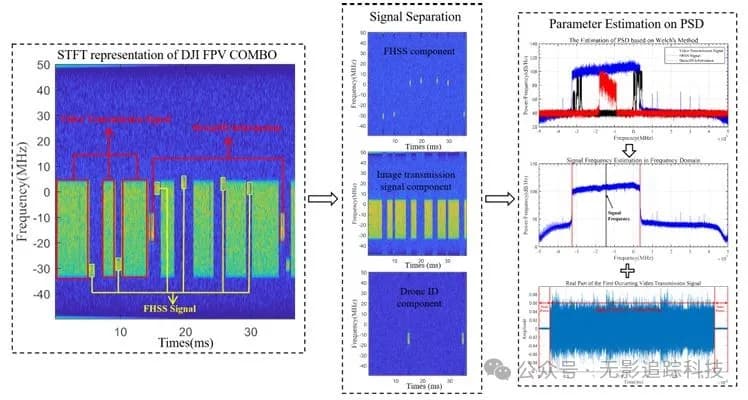

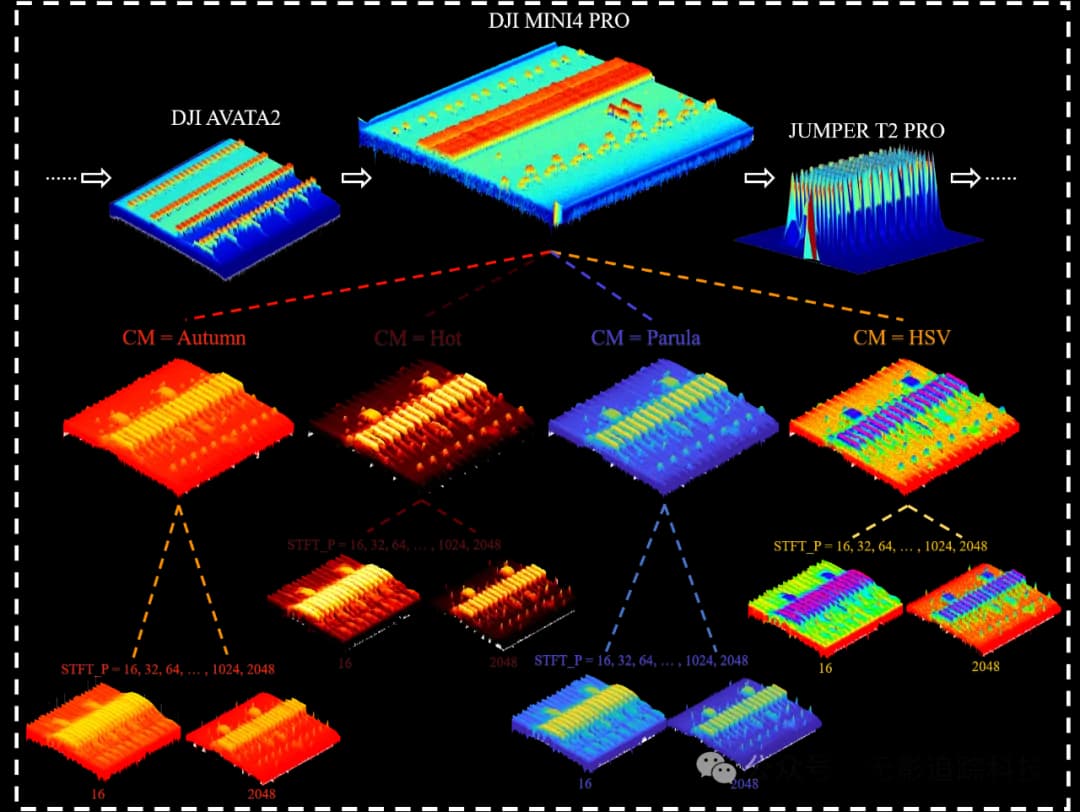

Next, they computed the short-time Fourier transform (STFT) of the IQ data and plotted it as a time-frequency diagram, turning the time-domain signal into image data of the kind AI models are good at processing. Choosing the right number of FFT points is crucial. The paper's figures compare the STFT at different numbers of points. Too few FFT points give coarse frequency resolution and weak signal energy in each bin. Too many give coarse time resolution, so signals from separate transmissions can merge and their details become impossible to distinguish.
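To make the trade-off concrete, here is a minimal NumPy sketch of my own (not the authors' code). It runs a non-overlapping, Hann-windowed STFT at three FFT sizes on a synthetic signal. The `stft_db` helper, the 10 MHz tone burst, and the noise level are all assumptions chosen for illustration; only the 100 MS/s sampling rate is taken from the dataset description.

```python
import numpy as np

def stft_db(iq, n_fft, fs):
    """Hann-windowed, non-overlapping STFT of complex IQ samples, in dB.

    Returns (spectrogram, frequency resolution in Hz, time resolution in s).
    """
    n_frames = len(iq) // n_fft
    frames = iq[: n_frames * n_fft].reshape(n_frames, n_fft)
    spectrum = np.fft.fft(frames * np.hanning(n_fft), axis=1)
    spectrum = np.fft.fftshift(spectrum, axes=1)  # put 0 Hz in the middle column
    power_db = 20 * np.log10(np.abs(spectrum) + 1e-12)
    return power_db, fs / n_fft, n_fft / fs

# Synthetic test signal (an assumption, not RFUAV data):
# a 1 ms burst of a 10 MHz tone sitting in complex white noise.
fs = 100e6
rng = np.random.default_rng(0)
n = 2**18
iq = 0.1 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
t = np.arange(n) / fs
iq[50_000:150_000] += np.exp(2j * np.pi * 10e6 * t[50_000:150_000])

for n_fft in (64, 1024, 16384):
    spec, df, dt = stft_db(iq, n_fft, fs)
    print(f"n_fft={n_fft:6d}  frames={spec.shape[0]:5d}  "
          f"freq res={df / 1e3:8.1f} kHz  time res={dt * 1e6:7.2f} us")
```

The frequency resolution is `fs / n_fft` and the duration of one frame is `n_fft / fs`, so their product is always 1: every gain in frequency detail is paid for in time detail. A production version would normally use overlapping frames, which I left out to keep the arithmetic visible.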

3. Signal Feature Parameters

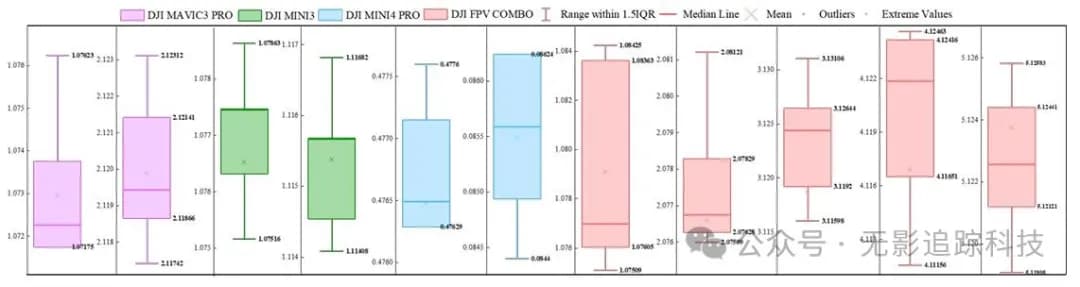

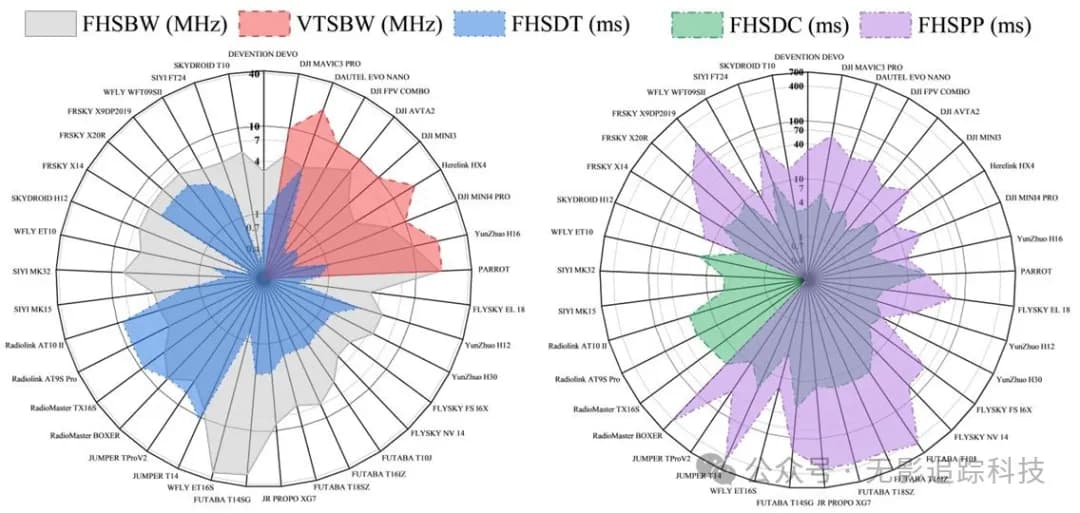

The authors analyzed the basic features of different drone signals. In the time domain, this includes the duration of each video transmission (ms) and the interval between transmissions (ms). In the frequency domain, it includes signal bandwidth and frequency hopping patterns.
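As a rough illustration of how the time-domain parameters could be measured, the sketch below thresholds a smoothed power envelope and reads burst durations and gaps off its edges. This is my own simplification, not the paper's method. The `burst_timing` helper, the smoothing length, the 50% threshold, and the synthetic 2 ms on / 3 ms off signal are all assumptions.

```python
import numpy as np

def burst_timing(iq, fs, smooth=256, threshold_ratio=0.5):
    """Estimate burst durations and gaps (both in ms) by energy thresholding."""
    power = np.abs(iq) ** 2
    # Moving average of the instantaneous power gives a smooth envelope.
    envelope = np.convolve(power, np.ones(smooth) / smooth, mode="same")
    active = envelope > threshold_ratio * envelope.max()
    edges = np.diff(active.astype(np.int8))
    starts = np.flatnonzero(edges == 1) + 1   # first sample of each burst
    stops = np.flatnonzero(edges == -1) + 1   # first sample after each burst
    # Drop bursts that are cut off by the start or end of the recording.
    if active[0]:
        stops = stops[1:]
    if active[-1]:
        starts = starts[:-1]
    durations_ms = (stops - starts) / fs * 1e3
    intervals_ms = (starts[1:] - stops[:-1]) / fs * 1e3
    return durations_ms, intervals_ms

# Synthetic signal (an assumption): four 2 ms bursts separated by 3 ms gaps.
fs = 1e6
on, off = 2000, 3000
gate = np.concatenate([np.tile(np.r_[np.zeros(off), np.ones(on)], 4), np.zeros(off)])
t = np.arange(gate.size) / fs
rng = np.random.default_rng(1)
noise = 0.05 * (rng.standard_normal(gate.size) + 1j * rng.standard_normal(gate.size))
iq = gate * np.exp(2j * np.pi * 50e3 * t) + noise

durations_ms, intervals_ms = burst_timing(iq, fs)
print("durations (ms):", np.round(durations_ms, 2))
print("intervals (ms):", np.round(intervals_ms, 2))
```

This only works when a single emitter dominates the capture. The frequency-domain parameters (bandwidth, hopping pattern) would need a similar threshold applied along the frequency axis of the STFT, which is not shown here.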

4. AI Model Training

The paper's AI pipeline has two stages. The first uses YOLO to detect drone or remote controller signals in the STFT images, that is, to draw bounding boxes around them. The second uses a ResNet classification network to classify each detected target, deciding whether it is a drone signal and, if so, which specific model it is.
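The data flow of such a detect-then-classify pipeline can be sketched as follows. To keep the example runnable without any deep learning framework, both stages are toy stand-ins that I made up: `toy_detector` is a simple threshold in place of YOLO, and `toy_classifier` is a width rule in place of ResNet. The labels "wide" and "narrow" are hypothetical too. Only the structure (boxes first, then one classification per crop) mirrors the paper.

```python
from typing import Callable, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (row_start, row_stop, col_start, col_stop)

def detect_then_classify(
    image: np.ndarray,
    detector: Callable[[np.ndarray], List[Box]],
    classifier: Callable[[np.ndarray], str],
) -> List[Tuple[Box, str]]:
    """Stage 1 proposes boxes; stage 2 labels the crop inside each box."""
    results = []
    for box in detector(image):
        r0, r1, c0, c1 = box
        results.append((box, classifier(image[r0:r1, c0:c1])))
    return results

def toy_detector(image: np.ndarray) -> List[Box]:
    """Stand-in for YOLO: one box around every pixel brighter than 0.5."""
    mask = image > 0.5
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return []
    return [(int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1)]

def toy_classifier(crop: np.ndarray) -> str:
    """Stand-in for ResNet: label a crop by its width in frequency bins."""
    return "wide" if crop.shape[1] >= 16 else "narrow"

# Fake 64x64 "spectrogram" with a single bright rectangular signal block.
image = np.zeros((64, 64))
image[10:20, 5:30] = 1.0
results = detect_then_classify(image, toy_detector, toy_classifier)
print(results)
```

Passing the two stages in as callables keeps them independent, so either model can be swapped or retrained without touching the other.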

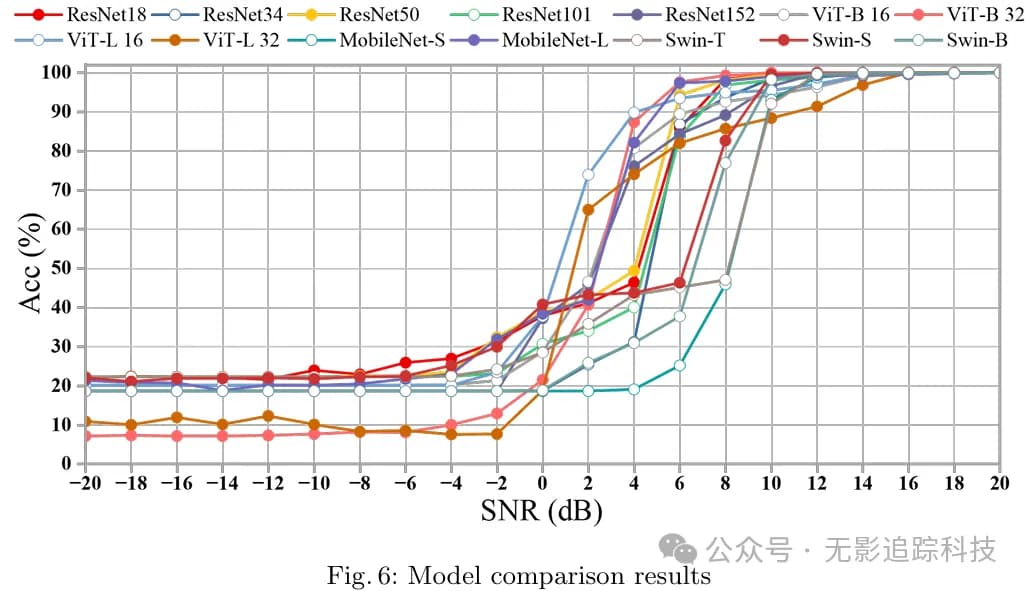

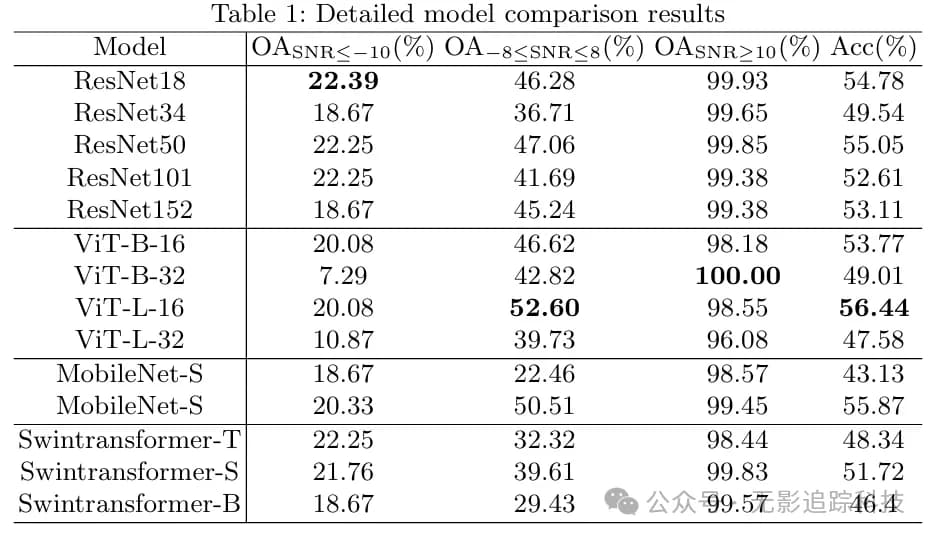

The paper compares the accuracy (Acc) of different AI models.

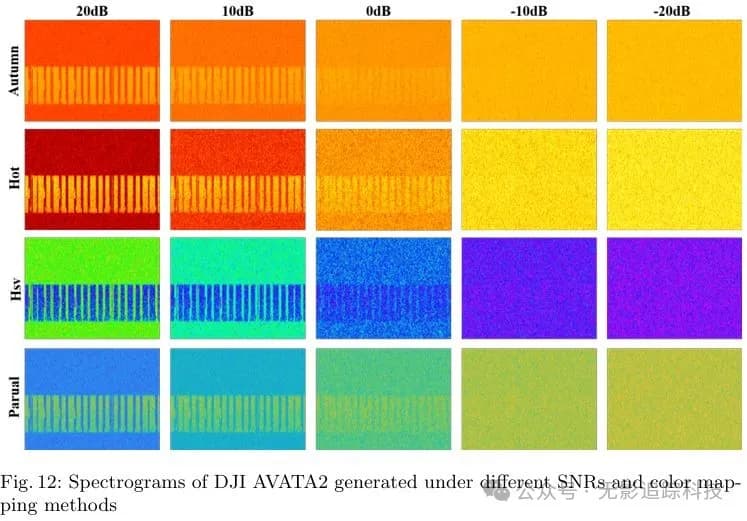

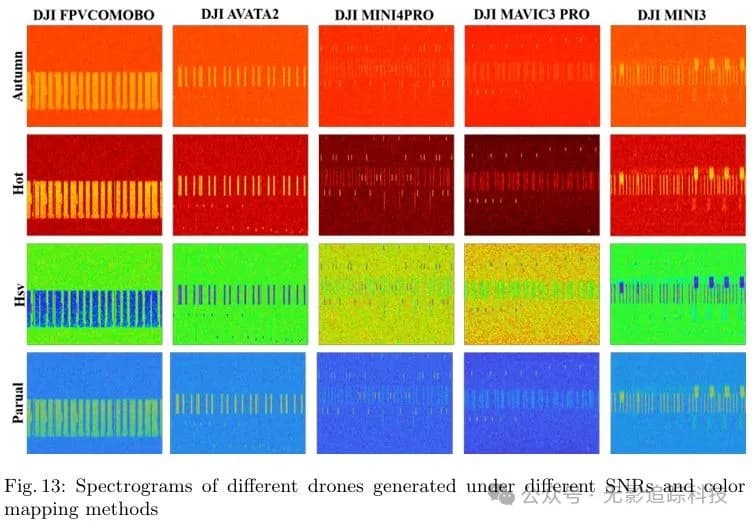

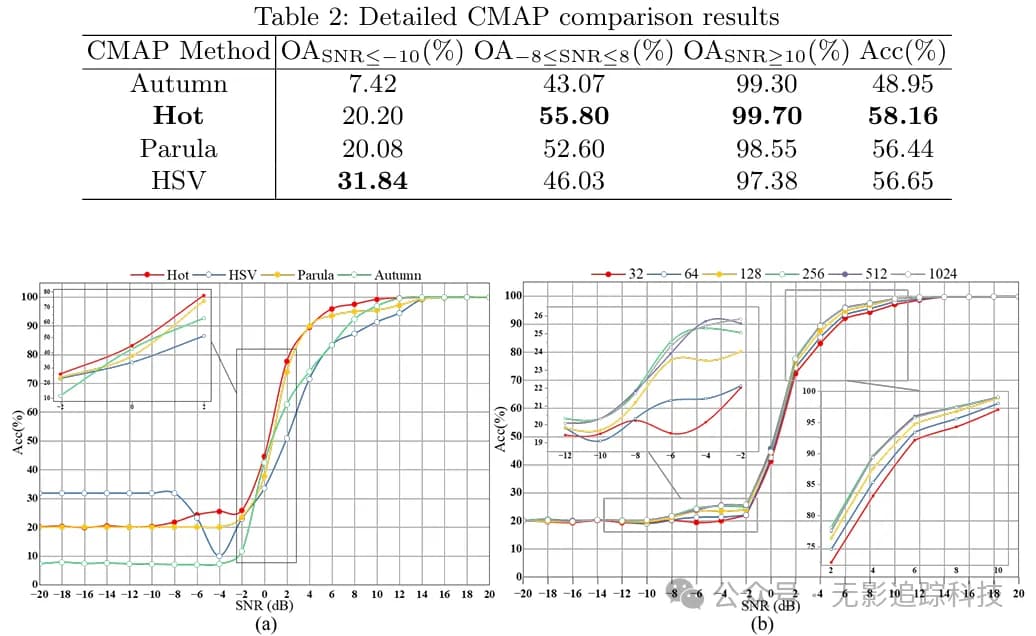

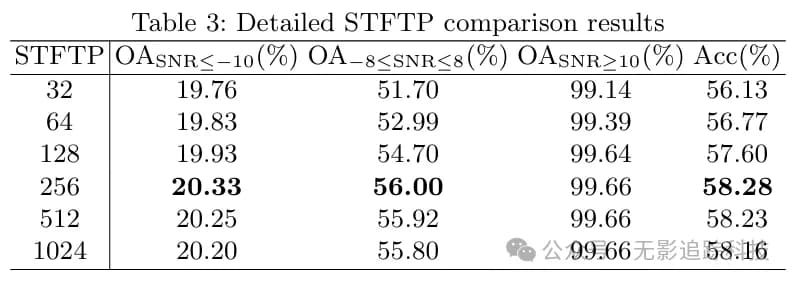

The paper suggests that the color map used for the STFT images affects the recognition rate (which I find a bit far-fetched). It also compares the overall accuracy (OA) of AI recognition on STFT images generated with different numbers of FFT points.

5. Running Results

In my opinion, the paper's greatest contribution is an excellent dataset, recorded at a sampling rate of 100 MS/s with high signal-to-noise ratios. The detection method offers some reference and inspiration. However, the authors' training and validation simulate different signal-to-noise ratios by artificially adding white noise, which I believe does not reflect real-world conditions. The biggest challenge in spectrum detection and identification is that the actual ISM band is very busy, and the clean signal blocks seen in the video are often obscured by other signals. Whether AI recognition can still maintain high accuracy under those conditions remains to be verified.
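For reference, adding white noise to reach a target signal-to-noise ratio usually looks something like the sketch below. This is a generic version I wrote for illustration, not the authors' exact procedure. The `add_awgn` helper is hypothetical, and it assumes the clean recording contains only signal, so its mean power can be used as the signal power.

```python
import numpy as np

def add_awgn(iq, snr_db, rng):
    """Add complex white Gaussian noise so that signal/noise power equals snr_db."""
    signal_power = np.mean(np.abs(iq) ** 2)  # assumes the input is noise-free
    noise_power = signal_power / 10 ** (snr_db / 10)
    # Split the noise power equally between the I and Q components.
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(iq.shape) + 1j * rng.standard_normal(iq.shape)
    )
    return iq + noise

# Synthetic clean signal (an assumption): a unit-amplitude complex tone.
rng = np.random.default_rng(0)
clean = np.exp(2j * np.pi * 0.01 * np.arange(100_000))

for target_db in (20.0, 10.0, 0.0):
    noisy = add_awgn(clean, target_db, rng)
    measured_db = 10 * np.log10(
        np.mean(np.abs(clean) ** 2) / np.mean(np.abs(noisy - clean) ** 2)
    )
    print(f"target {target_db:5.1f} dB -> measured {measured_db:5.2f} dB")
```

The noise here is flat across the band and independent of everything else, which is why it is a poor substitute for a crowded ISM band: Wi-Fi or Bluetooth traffic shows up as structured blocks in the spectrogram that can overlap or imitate the drone signal.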